local and private LLMs for beginners, with koboldcpp and SillyTavern

you'll probably just use it for ERP

Part 1: intro, basic concepts

but why?

system requirements

basic vocab and concepts

Did you know you can run your own text AI on your home computer, completely offline, completely private, using free open-source software?

I think there are already numerous guides that teach similar things, but a lot of them seem to assume the reader already knows certain things, and others mostly focus on blind-follow software setup steps, so once you do get it set up, you don’t really know what you’re doing.

This essay aims to be a beginner-level, relatively non-technical, learn-as-you-go crash course on basic LLM vocab and concepts. It assumes you're using a relatively modern Windows PC, although Mac users may be able to follow along if they know how to do the same tasks in macOS.

Conciseness isn’t one of my best skills, so don’t expect a quick guide.

but why?

Maybe you…

greatly value your privacy

want to try an unfiltered AI that doesn't talk to you like a sterilized robot or therapist. The AI can take on any role or character you want!

want to learn more about this new tech

want to experiment with using AI as an assistant for creative writing or coding. You can actively participate in your stories as a creative co-writer, not just a passive reader!

need a social, mental, or fantasy outlet of some sort. Maybe you don't know someone who can fulfill this need, or you don't trust the people in your life. Or maybe you live in a part of the world where asking for help will get you sent to some sort of re-education camp. I don’t recommend LLMs for medical advice, but they can be good non-judgmental listeners, or someone to vent to.

The vast majority of online AI chat apps and services aren't very good about privacy and ownership of your data. The worst apps and services straight up tell you that they own everything you say. Slightly better services claim your data is private and yours, but still have all sorts of sketchy and vague clauses in their terms of service or privacy policies.

And there are a few services that really do seem to genuinely respect your privacy and data, but the fact remains that your data is being transmitted across the internet, hitting a server somewhere, and coming back again. While these systems are reasonably secure, using them still requires a level of faith and trust.

Furthermore, the services and apps I used gave me limited control over the AI. It was frustrating to have these developers tell me how I should use my software. One developer even told me that my feature requests would "devalue" the AI's responses, even while their AI was blatantly ignoring things I was saying, disregarding my requests, and so on… causing me to devalue the AI.

I've also tried other closed-source one-click solutions that are meant to be easy, but these apps were buggy, and I couldn't opt out of their telemetry (tracking my app usage). These apps were probably designed to lock me into their software ecosystem so they could make money.

I got fed up and just decided to do what I should've done from the start... and I found out it wasn't as difficult as I thought. Hopefully this makes it a bit easier for someone to take their first few steps.

By the way, this guide is inspired somewhat by an old book called MS-DOS 5.0 for Dummies, written by Dan Gookin. It helped me learn that operating system when I was a kid, and it was a much more friendly and palatable companion to the one-inch thick DOS manuals. Thanks, Dan; your name is weird but your book is pretty rad.

system requirements

This part does assume you know basic facts about your computer, like RAM vs VRAM, GPU vs CPU, etc.

At the bare minimum, I would recommend at least 8 GB of free RAM. Press Ctrl + Shift + Esc to open the Task Manager → Performance tab → Memory to check how much you have. On a Mac, the equivalent is Activity Monitor (Memory tab). You can get by with less memory, but the smaller AI models that fit will be very unimpressive.

Ideally, you want a recent graphics card with 8 GB+ of video memory (GPU tab in Task Manager). Video memory (VRAM) is much faster than regular RAM; for example, running models on my GPU is about 10x faster than on my CPU. Nvidia used to have a serious edge for this particular use, but support for other brands has improved a lot, and you should be able to use most modern GPUs.

However, integrated GPUs or AMD APUs won’t be nearly as fast because they don’t have dedicated VRAM.

If you have a Mac Studio with unified memory, or an AMD Epyc system with multi-channel memory, the speed is somewhere between the two (a CPU with regular RAM vs. a dedicated GPU), but you may be able to run much larger AI models than on a GPU alone.

There has been significant progress in optimizing LLMs, making them much smaller, faster, and more intelligent, so even if your experience is not quite what you expected now, things will probably improve surprisingly quickly.

basic vocab and concepts

You will probably encounter these concepts frequently in your LLM adventures. You don’t need to fully understand all this right away; it’s enough to get the basic idea.

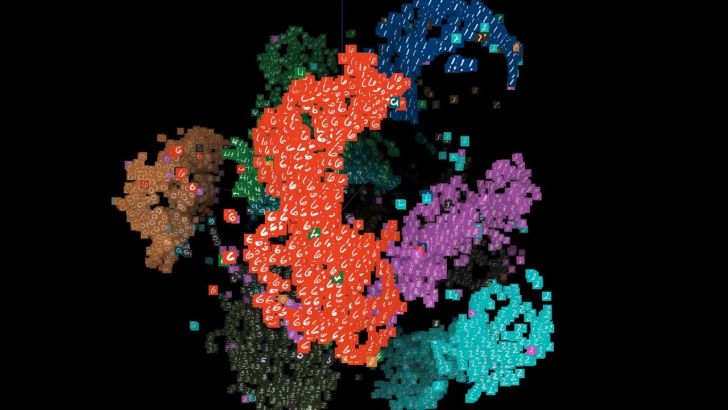

Yes, AI is actually learning; AI are not actually storing copies of whatever they’ve learned, although some people seem to believe this. A quick, visual, non-technical demonstration:

LLM, or Large Language Model: Basically, the current generation of text and language AI. At their core, they currently are "text completion AI," meaning if you start by writing "once upon a time," it will probably recognize it's a fairy tale of some sort, and follow up with appropriate text. Even when you’re in some sort of simulated chat, under the hood, the AI "sees" it as a document to complete.
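To make the "document to complete" idea concrete, here's a minimal Python sketch of how a chat might be flattened into one block of text before it reaches the model. The `build_document` function and the plain "Name: text" layout are hypothetical. Real frontends such as SillyTavern use their own configurable templates, and many models expect a specific format.

```python
# A hypothetical chat history, as (speaker, text) pairs.
chat = [
    ("User", "Hi! Can you tell me a short story?"),
    ("Assistant", "Once upon a time, there was a curious little robot."),
    ("User", "What happened next?"),
]

def build_document(system_prompt: str, turns: list[tuple[str, str]], ai_name: str) -> str:
    """Flatten a chat into one block of text for a completion-style model."""
    lines = [system_prompt, ""]
    for speaker, text in turns:
        lines.append(f"{speaker}: {text}")
    # End with the AI's name and a colon, so the most natural "completion"
    # of this document is the AI's next line of dialogue.
    lines.append(f"{ai_name}:")
    return "\n".join(lines)

document = build_document(
    "The following is a friendly conversation between a User and an Assistant.",
    chat,
    "Assistant",
)
print(document)
```

From the model's point of view, there is no "chat": there is only this text, and its job is to guess what comes after the final `Assistant:`.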

Model: This contains the data or "weights" as shown in the video above. Basically, it's the set of accumulated knowledge from its initial training process, which you’ll download as a file onto your computer later.

Parameters: Models will be listed in formats like 7B, 13B, 70B, etc., which indicate how many billions of parameters are contained in each model. More parameters generally means more complexity, more intelligence, more nuanced use of language, and… higher system requirements.

Currently, I think the 7-9B region is generally the lowest boundary to get a reasonably intelligent chat experience.

Quantization, or quants: Quantization compresses data in a lossy way. It throws away some precision but keeps enough useful data, while greatly reducing the file size. Think of the "needs more JPEG" meme, where a JPEG is re-compressed over and over until it's scrambled. Normally, though, this compression only happens once, and most people can’t tell a high-quality JPEG from the original raw image.
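Here's a minimal Python sketch of the idea. It assumes the simplest possible scheme: every weight is divided by one shared scale, rounded to a 4-bit integer (-7 to 7), and later multiplied back. The rounding step is the lossy part. Real formats, like the quantized model files you'll download later, quantize small blocks of weights, each with its own scale, and use smarter tricks. Treat this as an illustration, not as how any actual format works.

```python
def quantize(weights: list[float], bits: int = 4) -> tuple[list[int], float]:
    """Round each weight to a small signed integer using one shared scale."""
    max_level = 2 ** (bits - 1) - 1                    # 7 for 4-bit
    largest = max(abs(w) for w in weights) or 1.0      # avoid dividing by zero
    scale = largest / max_level
    ints = [round(w / scale) for w in weights]         # this rounding is where detail is lost
    return ints, scale

def dequantize(ints: list[int], scale: float) -> list[float]:
    """Turn the integers back into approximate floats."""
    return [q * scale for q in ints]

weights = [0.12, -0.53, 0.91, 0.07, -0.88, 0.33]
ints, scale = quantize(weights, bits=4)
restored = dequantize(ints, scale)
for original, back in zip(weights, restored):
    print(f"{original:+.3f} -> {back:+.3f}  (error {abs(original - back):.3f})")
```

Each restored weight is close to the original but not identical. Like a decent JPEG, it's usually "close enough."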

Model quants will be listed as Q4, Q8, FP16, etc., indicating the bits of precision per weight. So a Q4 quant uses a quarter of the bits of an FP16 and is roughly 25% of the size, but the quality is still quite high. Quality loss accelerates rapidly below Q4, so Q4 is the sweet spot for most normal people. Not to mention, smaller quants take up less memory and run faster!

If you have enough VRAM and want a smarter model, it’s usually better to increase the parameter count instead of getting a larger quant. So rather than going for a 9B at Q8, it’s usually better to find a 20B at Q4 with a similar file size. However, newer and smaller models can be just as good as or better than older, larger models. Some people even say larger quants preserve more logic abilities in LLMs, but I don’t know of any empirical tests that prove this.
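The size arithmetic is simple enough to sketch in a few lines of Python: parameters × bits per weight ÷ 8 gives bytes. This is a rough estimate that assumes every weight uses exactly the listed number of bits. Real quant files tend to be somewhat larger because they also store scaling data. Running a model also needs extra memory for the context on top of the file itself.

```python
def approx_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough model file size: parameters x bits per weight, converted to gigabytes."""
    total_bits = params_billions * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9   # 8 bits per byte, 1e9 bytes per GB

for name, params, bits in [("9B Q8", 9, 8), ("20B Q4", 20, 4), ("9B FP16", 9, 16)]:
    print(f"{name}: ~{approx_size_gb(params, bits):.1f} GB")
```

By this estimate, a 9B Q8 and a 20B Q4 land at roughly 9 GB and 10 GB, which is why they compete for about the same amount of memory.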

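Token: Current LLMs don't see words directly. Instead, text is split into pieces (whole words, parts of words, and punctuation), and each piece is assigned a number, which is easier for the computer to work with. Every input and output from the AI is converted to and from these numeric tokens by a "tokenizer". Very roughly, a token is equivalent to one word, although longer or rarer words are often split into several tokens.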
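To illustrate the encode/decode round trip, here's a toy tokenizer in Python. The vocabulary and ID numbers are made up, and the greedy "longest piece first" matching is a simplified stand-in. Real tokenizers typically use learned methods such as byte-pair encoding, with vocabularies of tens of thousands of pieces. The sketch does show how one word ("unbelievable") can become several tokens.

```python
# A tiny, made-up vocabulary. Real tokenizers learn their pieces from training
# data; these IDs are arbitrary and only for illustration.
VOCAB = {"once": 0, " upon": 1, " a": 2, " time": 3, ",": 4, " un": 5, "believ": 6, "able": 7}
ID_TO_PIECE = {i: piece for piece, i in VOCAB.items()}

def encode(text: str) -> list[int]:
    """Greedy longest-match: repeatedly take the longest vocabulary piece that fits."""
    ids = []
    pos = 0
    while pos < len(text):
        for end in range(len(text), pos, -1):
            piece = text[pos:end]
            if piece in VOCAB:
                ids.append(VOCAB[piece])
                pos = end
                break
        else:
            raise ValueError(f"No token for {text[pos]!r}")
    return ids

def decode(ids: list[int]) -> str:
    """Turn token IDs back into text by gluing their pieces together."""
    return "".join(ID_TO_PIECE[i] for i in ids)

ids = encode("once upon a time, unbelievable")
print(ids)          # [0, 1, 2, 3, 4, 5, 6, 7]
print(decode(ids))  # once upon a time, unbelievable
```

Notice that " unbelievable" becomes three tokens (" un", "believ", "able"), which is why token counts usually run a bit higher than word counts.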

Prompt: This is basically a set of instructions to the AI, but it's easier to understand with:

Context: Remember the text completion thing above? To make it less abstract, visualize "context" as three sheets of paper.

The first page contains the prompt that describes the text, or provides instructions to the readers: you and the AI.

Let's say the second page is where the AI's response starts. Depending on the setup and prompt, you and the AI will take turns completing the text.

As the text fills up the third page, the AI will discard the second page (essentially forgetting it) and add another blank page. This is why a large context is important for a coherent conversation. Context size is measured in tokens; I recommend at least 4k (4,096 tokens), but there are ways to get around these limits, kinda.
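Here's a hypothetical Python sketch of that "forgetting" step. It always keeps the prompt (page one), then keeps as many of the newest messages as fit in the budget, and drops everything older. The function names are made up, and token counts are faked with a crude one-word-per-token estimate. Real software like koboldcpp and SillyTavern counts actual tokens and offers more options than this.

```python
def estimate_tokens(text: str) -> int:
    """Very rough estimate: about one token per word."""
    return len(text.split())

def fit_to_context(prompt: str, messages: list[str], max_tokens: int) -> list[str]:
    """Keep the prompt ("page one") and as many of the newest messages as fit."""
    budget = max_tokens - estimate_tokens(prompt)
    kept = []
    for message in reversed(messages):       # walk from newest to oldest
        cost = estimate_tokens(message)
        if cost > budget:
            break                            # this message and everything older is "forgotten"
        kept.append(message)
        budget -= cost
    kept.reverse()                           # restore chronological order
    return [prompt] + kept

prompt = "You are a helpful storyteller."
messages = [
    "User: tell me a story about a dragon",
    "AI: once upon a time a dragon slept",
    "User: what did it dream about",
    "AI: it dreamed of endless golden treasure",
]
context = fit_to_context(prompt, messages, max_tokens=20)
print("\n".join(context))
```

With a 20-"token" budget, the two oldest messages fall off. The AI would no longer "know" the story is about a dragon unless the newer messages mention it, which is exactly the kind of gap that leads to hallucination.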

Fun fact: When the AI generates text, it does so one token (roughly one word) at a time. For every token generated, it "reads" the entire context again. If that sounds kinda inefficient… maybe it is.
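Here's a toy Python sketch of that loop. The `toy_model` function is a made-up stand-in for a real LLM. It's handed the entire context at every step, though it only peeks at the last word. The counter shows how the total amount "read" keeps growing as the text gets longer. Real software keeps a cache of work already done on earlier tokens (often called a KV cache), so it doesn't redo everything from scratch, but each new token still looks back over the whole context. Whole words are used here instead of tokens to keep it readable.

```python
# A made-up "model": for each word, the word it thinks is most likely to come next.
NEXT_WORD = {"once": "upon", "upon": "a", "a": "time", "time": "there", "there": "lived"}

def toy_model(context: list[str]) -> str:
    """Stand-in for an LLM: receives the WHOLE context, returns one next word.
    This toy only looks at the last word; a real model weighs all of them."""
    return NEXT_WORD.get(context[-1], "<end>")

def generate(prompt_words: list[str], max_new_words: int) -> tuple[list[str], int]:
    """Generate one word at a time, feeding the growing context back in each step."""
    context = list(prompt_words)
    words_read = 0
    for _ in range(max_new_words):
        words_read += len(context)      # the model is handed the entire context each step
        next_word = toy_model(context)
        if next_word == "<end>":
            break
        context.append(next_word)
    return context, words_read

text, words_read = generate(["once"], max_new_words=5)
print(" ".join(text))                   # once upon a time there lived
print("words read in total:", words_read)
```

Five new words cost 1 + 2 + 3 + 4 + 5 = 15 words of "reading," and that total keeps growing faster than the text itself.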

Hallucination: A sort of misnomer for when the AI seems to fabricate info, especially if it tries to refer to something that was dropped from context, or something it never learned about in the first place. But the AI still does its best to create something relevant to the current context and prompt, which can sometimes be an advantage for creative purposes.

Humans actually have a very similar behavior called confabulation, where we don’t always realize (or admit) that our brains have created new info. Hopefully I’ll get around to writing an essay about this sometime.

Oh right, we were supposed to set up some software.